It seems that the Sony A7Rm4 has raised the same questions I get every time we get cameras with more pixels: have we out-resolved lenses? Are more pixels useful?

Let’s start with the last question first: yes, more pixels are useful (each of the above images has four times as many pixels as the previous one). This is always true if you’re aspiring to best-possible data and best-possible results. Assuming that all else is equal, in the analog-to-digital world more sampling (more pixels per inch) is always better than less sampling (fewer pixels per inch).

In theory, more sampling gives you a closer approximation of the actual real-world data. This was easy to understand with audio. The classic example was to show a sine wave sampled at different frequencies. If your sampling rate was low enough, you might see only the peak and valley values and nothing in between. If your sampling rate was high enough, you started to see the shape of the sine wave and could clearly distinguish it from other kinds of waves.
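
To make the audio analogy concrete, here's a minimal Python sketch that samples a sine wave at two different rates and rounds the values so you can see what survives. The function name `sample_sine` and its parameters are illustrative only:

```python
import math


def sample_sine(cycles: int, samples_per_cycle: int, phase: float = math.pi / 2) -> list[float]:
    """Sample sin(2*pi*t + phase) at evenly spaced points over `cycles` periods.

    Values are rounded to three decimals so the pattern is easy to read.
    """
    n = cycles * samples_per_cycle
    return [
        round(math.sin(2 * math.pi * i / samples_per_cycle + phase), 3)
        for i in range(n)
    ]


# Two samples per cycle: only the peaks and valleys survive.
print(sample_sine(2, 2))
# Eight samples per cycle: the rounded shape of the wave starts to appear.
print(sample_sine(1, 8))
```

Note that with `phase=0.0` at two samples per cycle, every sample lands on a zero crossing and the wave vanishes entirely, which is why sampling at exactly two points per cycle is a knife-edge case.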

That said, in a perfect digital camera world, your camera would take multiple images (which averages down the quantum shot noise), focused at different distances (which builds a deep focus box instead of a plane), exposed at different values (providing better shadow and highlight information), and pixel shifted (to remove the Bayer demosaic aliasing). And it would do all that with as many sample points (photosites) as possible.
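
Multiple exposures don't literally eliminate shot noise, but they beat it down: for photon (Poisson) noise, averaging N frames cuts the noise by roughly √N. The sketch below is a hypothetical simulation of a single pixel, assuming 1,000 photons per frame and approximating Poisson noise with a Gaussian of σ = √mean (reasonable at that photon count). The numbers are illustrative, not measurements from any camera:

```python
import math
import random
import statistics


def simulate_pixel(mean_photons: float, frames: int, trials: int, rng: random.Random) -> float:
    """Return the std dev of one pixel's frame-averaged value across many trials.

    Shot noise is approximated as Gaussian with sigma = sqrt(mean), a stand-in
    for Poisson statistics at large photon counts (an assumption of this sketch).
    """
    sigma = math.sqrt(mean_photons)
    averages = [
        statistics.fmean(rng.gauss(mean_photons, sigma) for _ in range(frames))
        for _ in range(trials)
    ]
    return statistics.stdev(averages)


rng = random.Random(42)  # fixed seed so runs are repeatable
single = simulate_pixel(1000, frames=1, trials=20000, rng=rng)
stacked = simulate_pixel(1000, frames=16, trials=20000, rng=rng)
print(f"1 frame noise:   {single:.2f}")   # should land near sqrt(1000), about 31.6
print(f"16 frames noise: {stacked:.2f}")  # should land near 31.6 / 4, about 7.9
```

Sixteen frames should come out about four times quieter than one, which is the √N rule at work.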

But what if something is moving in that scene? Well, your post-processing software would have enough object recognition capability to detect the moving objects and pick (and perhaps build) the best stable view of each, while still using all the other data it could for the non-moving pieces.

So, yes, I’d rather have 61mp than 45mp, and 45mp than 36mp, and 36mp than 24mp. All else equal. Likewise I’d rather have focus stacking than not, HDR than not, and pixel shift than not ;~)

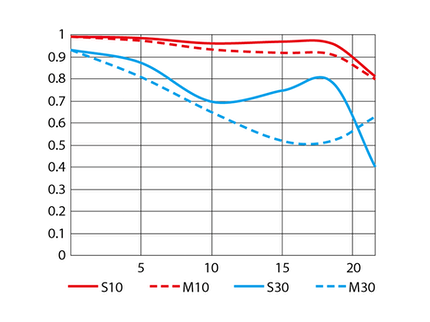

Meanwhile, we have the resolution issue, particularly with lenses. Most people use a surrogate for resolution, and it’s a poor one: the MTF value at some line frequency. Moreover, almost all the manufacturers show only calculated MTF values, and only at fairly low detail levels of 10 lp/mm and 30 lp/mm. Many independent testers find that their printed test charts dictate the maximum number that can be obtained. All of that provides a number. That MTF number is NOT resolution.
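
For perspective on how low 10 lp/mm and 30 lp/mm are, compare them with the sensor's Nyquist limit: one line pair needs at least two photosites, so f_N = 1/(2 × pitch). A quick Python check using the 3.76 micron A7Rm4 pitch mentioned later in this article, ignoring the Bayer filter (which lowers effective color sampling):

```python
def nyquist_lp_per_mm(pixel_pitch_um: float) -> float:
    """Highest line-pair frequency (lp/mm) a sensor with this pitch can sample.

    One line pair needs at least two photosites, so f_N = 1 / (2 * pitch).
    Bayer color filtering is ignored in this simplified sketch.
    """
    pitch_mm = pixel_pitch_um / 1000.0
    return 1.0 / (2.0 * pitch_mm)


f_n = nyquist_lp_per_mm(3.76)  # A7Rm4 photosite pitch quoted in the article
print(f"Nyquist: {f_n:.0f} lp/mm")
for f in (10, 30):
    print(f"{f} lp/mm is {f / f_n:.0%} of Nyquist")
```

That works out to roughly 133 lp/mm, so the 30 lp/mm figure sits at under a quarter of what the sensor can sample.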

Does the above chart show good resolution or bad?

Resolution is actually determined by a compound equation. That means that a whole chain of things is used to calculate total resolution, including sensor pitch, lens MTF, demosaic aliasing, and more.
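
One common way to model that compound equation (not necessarily the exact formulation intended here, and valid only for linear stages) is to multiply the MTF of each stage at a given spatial frequency. The Python sketch below computes the sensor term for an idealized square photosite with 100% fill factor and combines it with hypothetical lens MTF values. Demosaic, AA filter, and focus errors would be further factors in the product and aren't modeled:

```python
import math


def pixel_aperture_mtf(freq_lp_mm: float, pitch_um: float) -> float:
    """MTF of an idealized square photosite (100% fill factor): |sinc(f * p)|."""
    x = math.pi * freq_lp_mm * pitch_um / 1000.0
    return 1.0 if x == 0 else abs(math.sin(x) / x)


def system_mtf(*stages: float) -> float:
    """Cascade linear imaging stages: the system MTF at one frequency is the product."""
    return math.prod(stages)


# Hypothetical lens MTF values at 60 lp/mm; only the sensor term is computed.
sensor = pixel_aperture_mtf(60, 3.76)
for lens in (0.5, 0.7, 0.9):
    print(f"lens {lens:.1f} -> system {system_mtf(lens, sensor):.2f}")
```

Because every factor is at most 1, the system number can only drop as stages are added, so a weak link anywhere in the chain shows up in the result.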

What I tend to talk about is which of the many factors is the one most limiting the resolution capability of any imaging “system.” With the top level modern lenses and full frame sensors, it’s not the lens. Not yet, at least. And certainly not at the central area of the lens.

The real question you need to answer for both sampling and resolution is whether or not you can see the difference. I think we passed that point for most people some time ago, maybe at 24mp full frame with a 21st century lens. The roughly 20% increase in linear sampling of the 61mp sensor over the 42mp sensor is not enough for some people to see clearly, and because resolution is a compound factor, if you put a lower-cost, older, and/or less well-designed lens on the 61mp camera, you very well might see no change.
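
The 20% figure comes from linear versus area math: pixel count scales with area, so linear sampling (finer detail along an edge) rises only with the square root of the pixel ratio, assuming the same sensor size and aspect ratio. A small Python sketch using the pixel counts discussed in this article:

```python
import math


def linear_gain(new_mp: float, old_mp: float) -> float:
    """Per-axis sampling gain when pixel count rises from old_mp to new_mp.

    Assumes the same sensor dimensions and aspect ratio for both cameras.
    """
    return math.sqrt(new_mp / old_mp)


for old, new in ((24, 36), (36, 45), (42, 61), (24, 61)):
    print(f"{old}mp -> {new}mp: {new / old:.2f}x pixels, {linear_gain(new, old):.2f}x linear")
```

Going from 42mp to 61mp is about 1.45 times the pixels but only about 1.2 times the linear sampling; even 24mp to 61mp is only about 1.6 times linear.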

There’s a reason why everyone is redesigning lenses. What used to be the primary gating element in the resolution equation (the sensor) has slowly evolved into what we have now, and it is no longer as much of a gating element.

Think of film for a moment. While there were some strides made towards reducing grain size, there still seemed to be a fairly narrow and finite limit to what could be done. Grain reduction did not progress in nearly the way we’ve seen digital sampling increase. You could design a perfect lens and the film structure might simply be the gating element that would dictate what result you could attain. This led lens designers to emphasize aspects of their lenses other than absolute resolution.

Image sensors for digital cameras are no longer close to being the gating element. Back when Nikon's D1 was one of the first DSLRs, at 2.7mp, yes, the sensor was a primary gating element of total system resolution.

Meanwhile, the new Sony camera has 3.76 micron photosites. We have image sensor technologies in smartphones that are under 1 micron. That means we could probably create a full frame sensor with four times the linear sampling of the A7Rm4! (Moving all that data quickly is the issue that would keep you from doing it.) In other words, we still have a long way to go in improving the image sensor portion of the resolution equation. That’s why lens design has upped its game: the optical design groups, Canon and Nikon being two with deep and wide ability here, don’t want to find themselves a limiting element (pardon the pun) in what cameras can or can’t do.
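
The four times figure is easy to check with a little arithmetic. This Python sketch assumes a nominal 36 × 24mm frame (real sensors are slightly smaller) and ignores borders and masked pixels, so treat the results as rough:

```python
def full_frame_megapixels(pitch_um: float, width_mm: float = 36.0, height_mm: float = 24.0) -> float:
    """Approximate photosite count in megapixels for a sensor of the given size and pitch.

    Uses a nominal 36 x 24 mm frame by default (an assumption of this sketch).
    """
    cols = width_mm * 1000.0 / pitch_um
    rows = height_mm * 1000.0 / pitch_um
    return cols * rows / 1e6


for pitch in (3.76, 3.76 / 4, 1.0):
    print(f"{pitch:.2f} micron pitch -> about {full_frame_megapixels(pitch):.0f}mp")
```

3.76 microns gives about 61mp, a quarter of that pitch gives sixteen times the pixels (roughly 980mp), and even a 1 micron pitch would be about 864mp. That's the data-moving problem in a nutshell.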

There’s a ton of emphasis on sensor importance these days, mainly because almost anyone can understand that 12>6, and 24>12, and 42>24, and so on. Worse still, many don't understand linear versus area math, and make erroneous statements about the bigger number (doubling the pixel count raises linear sampling by only about 41%).

Sony is primarily an electronics company (as opposed to an optical company like Canon or Nikon), so it isn’t at all surprising that they lead with their core and highlight the electronics (the sensor).

But it takes two to tango (actually more, but that ruins the metaphor ;~). Optics have to run in lockstep with sensor capability in order to push the overall ability upwards.

Short version: if you’re not buying top lenses, you probably shouldn’t be buying high megapixel count cameras. And vice versa.

The “good enough” point for most full frame purchasers is almost certainly the 24mp cameras and a modern convenience lens (e.g. 24-105mm f/4 for Sony FE, 24-70mm f/4 for Nikon Z). Your FOLO (fear of losing out) is what makes you think you need 61mp.

Finally, one thing I haven’t pointed out: I don’t think Sony has done anything to reduce their file sizes to the level that Canon and Nikon have. That means you’re going to be generating one heck of a lot of data if you shoot the A7Rm4. The next complaints we'll start hearing will be about people having to increase their card sizes, memory, and computer processing power just because they bought a new state-of-the-art camera ;~).
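
How much data? Here's a back-of-the-envelope Python estimate, assuming 14 bits per photosite, tight packing, and no compression, headers, or embedded previews. Real raw files differ, and this doesn't model any particular camera's format; the 500-shot day is a hypothetical:

```python
def raw_megabytes(megapixels: float, bits_per_sample: int = 14) -> float:
    """Rough uncompressed raw size in MB (10**6 bytes).

    Assumes one sample per photosite packed at bits_per_sample, and ignores
    headers, embedded previews, metadata, and any compression.
    """
    return megapixels * 1e6 * bits_per_sample / 8 / 1e6


SHOTS_PER_DAY = 500  # hypothetical shooting day
for mp in (24, 61):
    per_shot = raw_megabytes(mp)
    per_day_gb = per_shot * SHOTS_PER_DAY / 1000  # 1,000 MB per GB
    print(f"{mp}mp: ~{per_shot:.0f} MB per shot, ~{per_day_gb:.0f} GB per {SHOTS_PER_DAY} shots")
```

Under those assumptions a 61mp frame is roughly 107MB versus 42MB at 24mp, or about 53GB versus 21GB over 500 shots.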

It may not seem like it from reading the popular photography Web sites, but do you know what the most popular camera/lens combos have been for quite some time now?

I'll wait for your answer...

...waiting...

...waiting...

It's 24mp APS-C with a kit lens, and by a large margin.

Why? Because it's "good enough" and far cheaper than a 61mp full frame camera and a high-end lens.

Update: a few smart folk pointed out that there are limits in digital sampling beyond which you probably won't get additional benefit, because the signal will already be well enough sampled. My point is that we haven't hit that yet. Not even close, that I can see. So for the time being, more sampling is still always better.
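
One commonly cited version of that limit is the point where the sensor's Nyquist frequency, 1/(2 × pitch), reaches the diffraction cutoff, 1/(λN), of an ideal lens at f-number N. Beyond that, an ideal lens passes no higher-frequency detail for the extra photosites to record. The Python sketch below assumes green light (λ = 0.55 microns) and a diffraction-limited lens, which real lenses at wide apertures are not:

```python
def diffraction_cutoff_lp_mm(f_number: float, wavelength_um: float = 0.55) -> float:
    """Incoherent diffraction cutoff of an ideal lens, 1 / (wavelength * N), in lp/mm."""
    return 1.0 / (wavelength_um / 1000.0 * f_number)


def pitch_to_reach_cutoff_um(f_number: float, wavelength_um: float = 0.55) -> float:
    """Pixel pitch whose Nyquist frequency 1/(2p) equals the cutoff: p = wavelength * N / 2."""
    return wavelength_um * f_number / 2.0


for n in (2.8, 5.6, 11):
    cutoff = diffraction_cutoff_lp_mm(n)
    pitch = pitch_to_reach_cutoff_um(n)
    print(f"f/{n}: cutoff {cutoff:.0f} lp/mm, sampling catches up at ~{pitch:.2f} micron pitch")
```

The pitch at which sampling catches up with diffraction depends heavily on aperture: about 0.77 microns at f/2.8 and about 1.5 microns at f/5.6, but around 3 microns by f/11. At wide apertures, aberrations in real lenses usually limit detail before diffraction does.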